In an era dominated by data, being able to gather information from the internet quickly can offer a notable edge in domains such as market analysis, competitor research, and social media monitoring. Using the best web scraping tools is crucial for extracting that data. This piece covers a selection of top-notch free web scraping tools to help you select the most suitable one for your requirements.

What Is a Web Scraping Tool?

A web scraping tool is a program created to collect data from websites. These tools mimic human browsing behavior to gather pieces of information from web pages and transform them into structured data that has many applications. Whether it's for retrieving product information, stock prices, or social media posts, web scraping tools automate the extraction step, saving time and improving precision.
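As a rough illustration of that transformation, the sketch below uses only Python's standard library to turn a small HTML fragment into a list of dictionaries. The markup, the class names (`product`, `name`, `price`), and the `ProductParser` helper are all hypothetical; a real page needs extraction rules matched to its own structure.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of the name and price spans for each product item."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished rows, one dict per product
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})          # start a new row
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


parser = ProductParser()
parser.feed(SAMPLE_HTML)
rows = parser.products
```

Each entry in `rows` is a plain dictionary, so it can be written to CSV or JSON without further conversion.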

How to Choose a Tool for Web Scraping?

Choosing the right web scraping tool involves several considerations:

Ease of Use: The tool should offer an easy-to-use interface that caters to users with different levels of programming expertise.

Data Extraction Capabilities: It should be able to extract various types of data, such as text and images, and navigate intricate website designs that rely on AJAX and JavaScript.

Speed and Efficiency: It should extract data quickly while minimizing the impact on system resources.

Scalability: The tool needs to manage large amounts of data and handle numerous projects without any decrease in performance.

Cost: This article mainly discusses free tools, but it's crucial to recognize the constraints of their free versions. Certain tools reserve upgraded features for their paid versions.

Octoparse

Octoparse is a popular choice among tools that don’t require coding, providing a program that can gather unstructured data from any site and arrange it into structured sets. The interface is easy to use and caters to those without technical skills, making it simple to define data extraction tasks with just a few clicks.

Pros:

- Scraping made easy without any coding

- Abundance of integrations available

- Access to both a free plan and a free trial for advanced features

- Documentation and help center offered in multiple languages including Spanish, Chinese, French, and Italian

- Support for OpenAPI

- Handles scraping challenges on your behalf

Cons:

- No Linux support

- Some hard-to-understand features

Features:

- Automated looping features

- Numerous templates available for scraping data from well-known websites

- Web scraping assistant powered by AI

- Cloud automation operating 24/7 for scheduling scrapers

- Automated handling of scraping challenges, including IP rotation and CAPTCHA solving

- Support for infinite scrolling, pagination, dropdown menus, hover actions, and various other simulations

Main Goal: Provide a desktop application that lets people without technical expertise extract information from websites, while also offering sophisticated features that let developers connect it with other tools.

Platforms: Windows, macOS

Integrations:

- Zapier

- Google Drive

- Google Sheets

- Custom proxy providers

- Cloudmersive API

- Airtable

- Dropbox

- Slack

- Hubspot

- Salesforce

ScrapingBee

ScrapingBee aims to make web scraping easier by managing browsers and proxies, making it a great tool for extracting data from websites with JavaScript-heavy content. The free plan offers a limited number of API requests per month, ideal for small or medium-sized tasks. Its API is simple to incorporate into existing tools, offering developers a versatile solution.

Pros:

- Billing based solely on successful requests

- Comprehensive documentation complemented by numerous blog posts

- Simplified setup for scraping endpoints

- Abundance of features

- Highly effective across a wide range of websites

Cons:

- Not the most rapid scraping API available

- Constrained concurrency capabilities

- Demands technical expertise

Features:

- Compatibility with interactive websites necessitating JavaScript execution

- Automated anti-bot circumvention, encompassing CAPTCHA resolution

- Tailorable headers and cookies

- Geographic precision in targeting

- Intercepting XHR/AJAX requests

- Exporting data in various formats such as HTML, JSON, and XML

- Scheduling scraping API calls

Main Goal: Provide an endpoint that lets developers easily retrieve information from any webpage.

Platforms: Windows, macOS, Linux

Integrations:

- Any HTTP client

- Any web scraping library
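Because the service is reached over plain HTTP, a request can be prepared with nothing more than the standard library. The sketch below builds such a request URL. The endpoint and the parameter names (`api_key`, `url`, `render_js`) are assumptions based on how the API is commonly documented, and `build_request_url` is a hypothetical helper, so check the current ScrapingBee documentation before relying on it.

```python
from urllib.parse import urlencode

# Assumed endpoint and parameter names; verify against the current documentation.
API_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_request_url(api_key: str, target_url: str, render_js: bool = True) -> str:
    """Return the GET URL that asks the API to fetch target_url on our behalf."""
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode() percent-encodes the target URL
        "render_js": "true" if render_js else "false",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url("YOUR_API_KEY", "https://example.com/products?page=2")
```

The resulting `request_url` can be handed to any HTTP client, for example `urllib.request.urlopen`, which fits the "Any HTTP client" integration listed above.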

Apify

Apify provides a blend of tools for web scraping, automation, and data integration. The free plan includes an SDK for creating scraping actors, storage features, and a scheduling tool. Apify is especially handy for those aiming to scale up their scraping activities or link scraping with cloud services.

Pros:

- No-code web scraping task definition

- Cross-platform

- Intuitive UI and UX

- Seamless cloud integration

Cons:

- CPU-intensive

- Not suitable for large-scale operations

Features:

- Scheduled executions

- Automated IP rotation

- Compatibility with interactive websites

- Support for conditionals and expressions

- Compatibility with XPath, RegEx, and CSS selectors

- Automated extraction of table data

- Extraction of data from node text and HTML attributes

- REST API and webhook integration

Main Goal: Provide a program that allows people without coding skills to easily scrape information from websites.

Platforms: Windows, macOS, Linux

Integrations:

- Apify cloud platform to store data

- HTTP clients via the Apify REST API

- Dropbox

- Amazon S3 storage

ScraperAPI

ScraperAPI manages proxies, browsers, and CAPTCHAs, enabling users to concentrate on data extraction. It suits developers who need to get past anti-scraping safeguards on websites. The free tier provides a limited number of API requests, with extra functionality available in premium plans.

Pros:

- Comprehensive documentation available in multiple programming languages

- Serving over 10,000 clients

- Access to free webinars, case studies, and resources for tool initiation

- Unlimited bandwidth allocation

- Assurance of 99.9% uptime

- Provision of professional support

Cons:

- Global geotargeting exclusively offered in the Business plan

- Technical expertise is a prerequisite

Features:

- Ability to render JavaScript

- Integration with premium proxies

- Automated parsing of JSON data

- Intelligent rotation of proxies

- Customizable headers

- Automatic retry mechanism

- Compatibility with custom sessions

- Bypassing CAPTCHA and anti-bot detection

Main Goal: Provide a single scraping endpoint that enables developers to gather information from any webpage.

Platforms: Windows, macOS, Linux

Integrations:

- Any HTTP client

- Any web scraping library
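The automatic retry mechanism listed among the features can be pictured with a small client-side sketch. This is not ScraperAPI's implementation, only an illustration of the general idea of retrying with exponential backoff; `fetch_with_retries` and `flaky_fetch` are hypothetical names.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0):
    """Call fetch(url); after a failure wait base_delay * 2**n seconds and retry."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose for a sketch; narrow it in real code
            last_error = error
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    raise last_error


# Hypothetical fetcher that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"<html>content of {url}</html>"


html = fetch_with_retries(flaky_fetch, "https://example.com", base_delay=0)
```

A hosted scraping API performs this kind of loop on its own servers, which is why client code can usually stay as simple as a single request.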

Playwright

Microsoft created Playwright, a Node.js library that enables developers to automate Chromium, Firefox, and WebKit using one API. It has a headless mode and can execute scripts for scraping modern web apps. The tool is widely used by JavaScript developers because it's free and open source.

Pros:

- Currently the most comprehensive browser automation solution on the market

- Created and consistently updated by Microsoft

- Works well across platforms, browsers, and programming languages

- Contemporary, swift, and effective

- Offers a variety of functions, such as waits, visual debugging, retry options, customizable reports, and more

- API designed to be intuitive and consistent across languages

Cons:

- Not so easy to set up

- Mastering all its features takes time

Main Goal: Automate tasks in a web browser by simulating user interactions programmatically.

Platforms: Windows, macOS, Linux

Features:

- Simulating browser interactions, including navigating, filling out forms, and fetching data

- APIs facilitating clicking, typing, form completion, and additional actions

- Support for both headed and headless modes

- Support for running tests in parallel across different web browsers

- Inclusive debugging functionalities

- Embedded reporting mechanisms

- Auto-waiting API for seamless automation

Integrations:

- JavaScript and TypeScript

- Java

- .NET

- Python

- Chrome, Edge, Chromium-based browsers, Firefox, Safari, WebKit-based browsers
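Since Python is among the supported languages, a minimal headless session might look like the sketch below. It assumes the `playwright` package and a Chromium build are installed (`pip install playwright`, then `playwright install chromium`); `fetch_page_title` is a hypothetical helper name.

```python
def fetch_page_title(url: str) -> str:
    """Open url in headless Chromium and return the page title."""
    # Imported lazily so this module still loads where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)  # waits for the page load event by default
            return page.title()
        finally:
            browser.close()  # always release the browser process
```

Calling `fetch_page_title("https://example.com")` launches the browser, loads the page, and closes the browser again. Swapping `p.chromium` for `p.firefox` or `p.webkit` targets the other engines through the same API.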

Data Scraper

Data Scraper, a Chrome extension, provides a simple method to gather information from web pages and transfer it directly into a spreadsheet. The free version is user-friendly, enabling individuals to extract data from one or multiple pages and store it in formats such as CSV. This tool is especially useful for those who are not developers or who need quick data extraction without intricate configuration.

Pros:

- Even individuals without programming expertise can easily navigate the point-and-click interface.

- As a browser extension, it is easy to set up. You can start using it in just a few minutes without any complicated settings.

- Direct export to CSV or Excel simplifies the process of data analysis

- Users can perform basic scraping tasks without any investment

Cons:

- As a Chrome extension, it only works in that browser, which may not be ideal for users who prefer or need a different browser.

- The tool may not be the best choice for extensive data extraction tasks because it lacks the advanced features commonly seen in more comprehensive web scraping tools.

- Sophisticated functionality, such as connecting to APIs, transforming data extensively, and handling intricate websites (like those relying heavily on JavaScript or protected by CAPTCHAs), is restricted or not available.

Main Goal: Make it easier to gather information from websites and convert it into organized formats such as CSV files. It is specifically tailored for individuals seeking an efficient way to extract data without coding skills or intricate web scraping tools.

Platforms: Windows, macOS, and Linux

Features:

- Users choose the data they want to extract by clicking on it in the browser.

Integrations:

- R, Python (using Pandas library)

- Excel, Google Sheets

- Any tool that imports CSV files, such as SQL databases
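An exported CSV file can be picked up in Python with the standard `csv` module (the Pandas route mentioned above works similarly). In this sketch the column names `name` and `price` and the sample rows are hypothetical stand-ins for a real export.

```python
import csv
import io

# Stand-in for an exported file; in practice use open("export.csv", newline="").
exported = io.StringIO("name,price\nKettle,24.99\nToaster,31.50\n")

rows = list(csv.DictReader(exported))             # one dict per row, keyed by header
prices = [float(row["price"]) for row in rows]    # CSV values arrive as strings
most_expensive = max(rows, key=lambda row: float(row["price"]))
```

From here the rows can be filtered, aggregated, or loaded into a database like any other tabular data.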

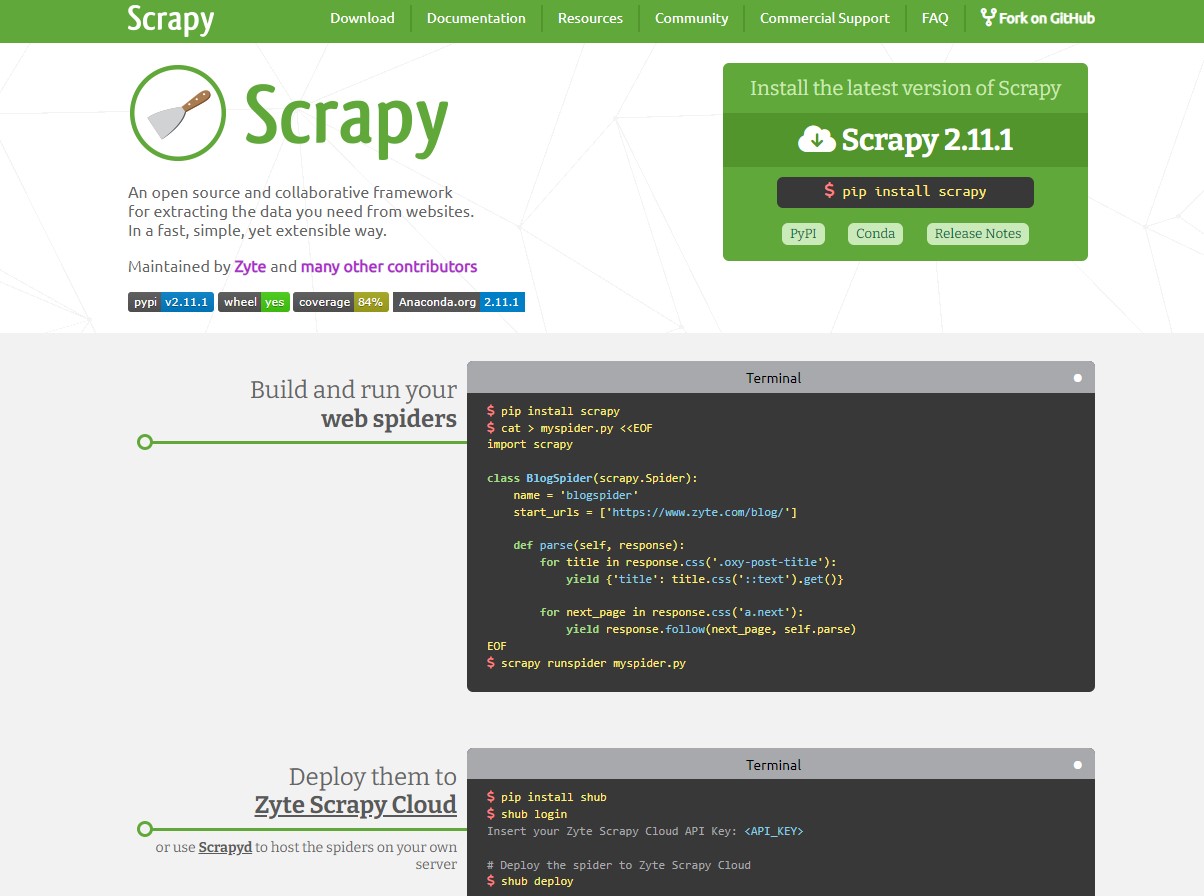

Scrapy

Scrapy is an open-source, customizable scraping framework. Developers use Python to write spiders for web crawling and data extraction. Its design and robust features for data extraction, request handling, and data processing make Scrapy well suited for large-scale web scraping projects.

Pros:

- Fast crawling and scraping framework

- Ideal for retrieving large-scale data

- Efficient memory usage

- Highly adaptable

- Expandable through middleware

- Smooth web scraping experience

Cons:

- Integrating Splash is necessary for scraping interactive sites

- Lacks native browser automation features

- Has a steep learning curve

Main Goal: Provide an API with advanced features for web crawling and scraping in Python.

Platforms: Windows, macOS, and Linux

Features:

- Support for CSS selectors and XPath expressions

- Integrated HTML parser

- Built-in HTTP client

- Automatic crawling logic

- JSON parsing

Integrations:

- Python

- Splash
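To give a feel for what "automatic crawling logic" spares you from writing, here is a standard-library sketch of a basic crawl loop: follow links, resolve relative URLs, and visit each page once. It is not Scrapy code and does not reflect how Scrapy is implemented; the in-memory `PAGES` site and the `LinkParser` and `crawl` names are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "site" so the sketch runs without network access.
PAGES = {
    "https://example.com/": '<a href="/page/2">next</a>',
    "https://example.com/page/2": '<a href="/page/3">next</a> <a href="/">home</a>',
    "https://example.com/page/3": "<p>last page</p>",
}


class LinkParser(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.links.append(href)


def crawl(start_url):
    """Breadth-first crawl that visits each URL once and returns the visit order."""
    seen, queue, order = {start_url}, deque([start_url]), []
    while queue:
        url = queue.popleft()
        order.append(url)
        parser = LinkParser()
        parser.feed(PAGES.get(url, ""))       # a real crawler would download the page
        for href in parser.links:
            absolute = urljoin(url, href)     # resolve relative links
            if absolute not in seen:          # skip pages already queued or visited
                seen.add(absolute)
                queue.append(absolute)
    return order


visited = crawl("https://example.com/")
```

A framework takes over the queueing, de-duplication, and downloading shown here, leaving the developer to describe which links to follow and which fields to extract.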

ParseHub

ParseHub is a data extraction tool capable of handling websites that use JavaScript, AJAX, cookies, and more. Its free version allows up to five scraping projects. It provides a desktop application for both Windows and macOS, catering to users with no programming background.

Pros:

- Define web scraping tasks without coding

- Compatible across multiple platforms

- User-friendly interface and experience

- Effortlessly integrate with cloud services

Cons:

- CPU-intensive

- Not suitable for large-scale operations

Main Goal: Provide desktop software that requires no coding knowledge, making it easy for people without technical skills to perform web scraping tasks.

Platforms: Windows, macOS, and Linux

Features:

- Scheduling automated runs

- IP rotation handled automatically

- Compatibility with interactive websites

- Support for conditionals and expressions

- Utilization of XPath, RegEx, and CSS selectors

- Automated extraction of table data

- Extraction from node text and HTML attributes

- Integration with REST API and web hooks

Integrations:

- ParseHub cloud platform to store data

- HTTP clients via the ParseHub REST API

- Dropbox

- Amazon S3 storage

Conclusion

Picking the best web scraping tool depends on your requirements: how intricate the job is, how much data you’re dealing with, and the type of websites you plan to scrape.

The tools covered above range from basic browser add-ons to robust, customizable frameworks, with options for beginners and seasoned developers alike. By choosing the right tool, you can simplify your data gathering process and obtain the precise and timely data required for well-informed decisions.
