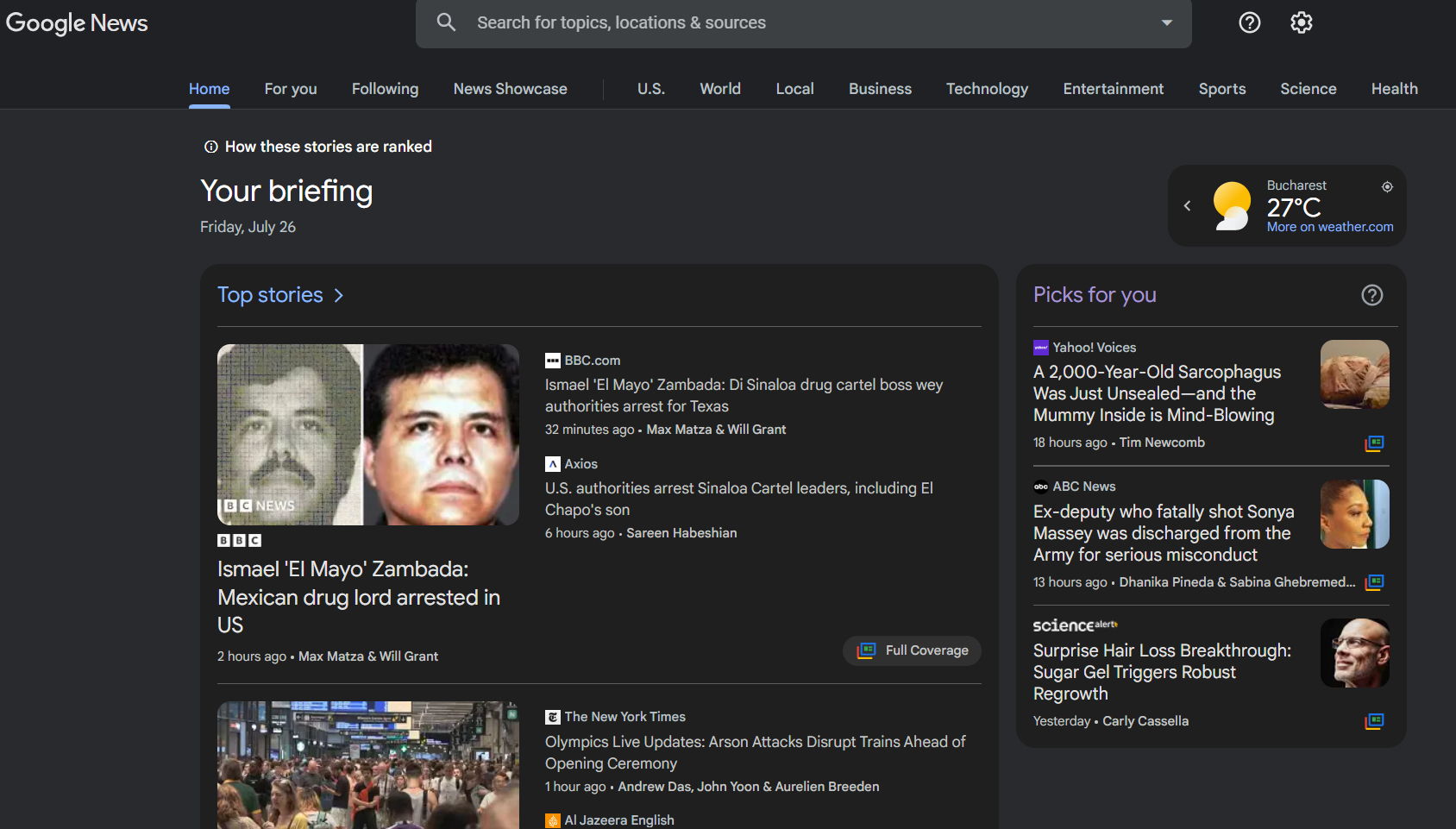

In today's data-driven world, keeping abreast of the news is vital for both individuals and businesses. Google News is a convenient platform for staying informed about current events and trends, but manually sifting through and analyzing its data is laborious and time-consuming.

This is where web scraping comes in handy. In this guide we will look at how to scrape Google News using Python, a versatile programming language that makes web scraping approachable even for beginners. By the end of this tutorial you will be able to extract information from Google News efficiently.

Why Scrape Google News?

Scraping Google News offers advantages in several areas. Here are a few important reasons to consider it:

Market Research: Businesses can use data from Google News to understand market trends, consumer preferences and industry developments. By analyzing news articles, companies can uncover insights about competitors, new technologies and potential opportunities within the market.

Content Creation: Writers, bloggers and other content creators can use news data to generate ideas, follow popular topics and stay current on what's happening in the world. This helps them produce content that is relevant and timely for their readers.

Academic Research: Researchers and students can use news data to study media coverage and perform sentiment analysis on different subjects. This can give insight into how events are reported and how the media shapes public opinion.

Sentiment Analysis: Studying the emotions expressed in news stories helps companies and researchers understand how the public feels about events, products or policies. This information is valuable for brand management, political analysis and gauging market sentiment.

Is it Legal to Scrape Google News?

Before diving into the details, it is important to consider the legal implications of web scraping. Whether scraping Google News is legal depends on factors such as the website's terms of service and how the data will be used.

Terms of Service

Google's terms of service restrict automated access to its services without authorization. Scraping Google News can therefore breach these terms, especially if it is done at scale or for commercial purposes.

Fair Use

Using scraped information for academic studies or small personal projects is often regarded as fair use and is less likely to lead to legal complications. Nonetheless, it is crucial to respect copyright law and the website's terms of service.

Ethical Considerations

Even where scraping is legally permissible, it is important to consider its ethical implications. Follow the website's guidelines, avoid overloading its servers with an excessive number of requests, and handle the collected data responsibly.

What Data Attributes Do We Need?

When you scrape Google News, the aim is to collect useful details from the search results. These are the data points we usually target:

- Headline: The title of the news article.

- Source: The publisher of the news article.

- Date: The publication date of the news article.

- Summary: A brief summary or snippet of the news article.

- URL: The link to the full news article.
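If you prefer a typed record to plain dictionaries, the five attributes above map naturally onto a small dataclass. The sketch below is illustrative. The class name `NewsArticle` is hypothetical and not part of any library, and the sample values are made up.

```python
from dataclasses import asdict, dataclass


@dataclass
class NewsArticle:
    """One scraped Google News result (hypothetical record type)."""
    headline: str  # title of the news article
    source: str    # publisher of the article
    date: str      # publication date as displayed (may be relative, e.g. "2 hours ago")
    summary: str   # snippet shown under the headline
    url: str       # link to the full article


article = NewsArticle(
    headline="Example headline",
    source="Example Publisher",
    date="2 hours ago",
    summary="A short snippet of the article.",
    url="https://example.com/story",
)
row = asdict(article)  # plain dict, ready for csv.DictWriter
```

`asdict` keeps the fields in declaration order, so the CSV columns come out in the same order as the list above.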

How to Scrape Google News with Python

To scrape Google News effectively, we need to follow a structured approach. Here’s a high-level overview of the process:

- Set Up the Environment: Install necessary Python libraries and tools.

- Send HTTP Requests: Access Google News search results.

- Parse HTML Content: Extract relevant data from the web pages.

- Store and Analyze Data: Save the extracted data for further analysis.

Step 1: Set Up the Environment

Let's start by preparing our Python environment and installing the required libraries. We will use requests to send HTTP requests and BeautifulSoup to parse HTML content. You can install both with pip:

```bash
pip install requests
pip install beautifulsoup4
```
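You can confirm that both packages are importable before continuing by using the standard library's `importlib.util.find_spec`. Note that the beautifulsoup4 package is imported under the name `bs4`. The helper name `check_dependencies` is our own and is shown here only as a convenience sketch.

```python
import importlib.util


def check_dependencies(modules=("requests", "bs4")):
    """Return a dict mapping each module name to True if it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


status = check_dependencies()
for name, installed in status.items():
    print(f"{name}: {'installed' if installed else 'missing'}")
```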

Step 2: Send HTTP Requests

We’ll send an HTTP request to Google News and retrieve the search results. Here’s a basic example:

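```python
import requests


def get_google_news(query):
    url = "https://www.google.com/search"
    # tbm=nws restricts results to the News tab; passing the query via params
    # lets requests URL-encode spaces and special characters such as "&"
    params = {"q": query, "tbm": "nws"}
    headers = {
        # A desktop browser User-Agent makes the request look less like a script
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }
    response = requests.get(url, params=params, headers=headers, timeout=10)
    if response.status_code == 200:
        return response.text
    return None  # blocked, rate-limited or another error


query = "latest technology news"
html_content = get_google_news(query)
```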

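In this code we define a function called get_google_news. It sends an HTTP GET request to Google's search page with the query and the tbm=nws parameter, which restricts the results to news. The headers parameter includes a browser User-Agent string to simulate a browser request. This makes it less likely, though not impossible, that Google blocks the request. If the response status is anything other than 200, the function returns None.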
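To see exactly which URL the request targets, and to make sure queries containing spaces or characters like `&` are encoded correctly, you can build the URL with `urllib.parse.urlencode`. This is a hypothetical helper (`build_news_url`). It assumes the same `tbm=nws` and `start` parameters used throughout this guide.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.google.com/search"


def build_news_url(query, start=0):
    """Build a Google search URL restricted to news results (tbm=nws)."""
    params = {"q": query, "tbm": "nws"}
    if start:
        params["start"] = start  # zero-based offset of the first result
    return f"{BASE_URL}?{urlencode(params)}"


print(build_news_url("AI & robotics"))
# https://www.google.com/search?q=AI+%26+robotics&tbm=nws
```

Without encoding, the `&` in "AI & robotics" would be read as a parameter separator and the query would be cut short.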

Step 3: Parse HTML Content

Using BeautifulSoup, we can parse the HTML content and extract the desired data attributes:

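```python
from bs4 import BeautifulSoup


def text_of(element):
    # Return the element's text, or an empty string if it was not found
    return element.get_text(strip=True) if element is not None else ""


def parse_news(html_content):
    if not html_content:  # the request failed or returned no HTML
        return []
    soup = BeautifulSoup(html_content, "html.parser")
    # These class names reflect Google's markup at the time of writing and
    # change often; inspect the live page and update them if nothing matches
    articles = soup.find_all("div", class_="dbsr")
    news_data = []
    for article in articles:
        link_tag = article.find("a", href=True)  # first link in the result
        news_data.append({
            "headline": text_of(article.find("div", class_="JheGif nDgy9d")),
            "source": text_of(article.find("div", class_="XTjFC WF4CUc")),
            "date": text_of(article.find("span", class_="WG9SHc")),
            "summary": text_of(article.find("div", class_="Y3v8qd")),
            "link": link_tag["href"] if link_tag is not None else "",
        })
    return news_data


news_data = parse_news(html_content)
```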

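The parse_news function uses BeautifulSoup to analyze the HTML returned by our HTTP request. It locates every news result on the page and retrieves the headline, source, publication date, summary and URL for each article. The results are stored in a list of dictionaries. The class names (such as dbsr) reflect Google's markup at one point in time. Google changes its HTML frequently, so if the function returns an empty list, inspect the current page with your browser's developer tools and adjust the selectors.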
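Google often shows recent publication dates as relative strings such as "3 hours ago" instead of calendar dates. If you need absolute timestamps for analysis, a small converter can help. The helper below is hypothetical. It assumes English labels of the form "<number> <unit> ago" and returns None for anything else, so you can handle those cases separately.

```python
import re
from datetime import datetime, timedelta

# Matches strings like "5 mins ago", "1 hour ago", "2 days ago" (assumed format)
_RELATIVE = re.compile(r"^(\d+)\s+(min|minute|hour|day|week)s?\s+ago$", re.IGNORECASE)
_UNIT_SECONDS = {"min": 60, "minute": 60, "hour": 3600, "day": 86400, "week": 604800}


def parse_relative_date(text, now=None):
    """Convert a relative date such as '3 hours ago' into a datetime, or None."""
    now = now or datetime.now()
    match = _RELATIVE.match(text.strip())
    if not match:
        return None  # unrecognized format, e.g. an absolute date like "Jan 5, 2024"
    amount, unit = int(match.group(1)), match.group(2).lower()
    return now - timedelta(seconds=amount * _UNIT_SECONDS[unit])


reference = datetime(2024, 1, 10, 12, 0)
print(parse_relative_date("3 hours ago", now=reference))  # 2024-01-10 09:00:00
```

Passing `now` explicitly keeps the conversion reproducible. Ideally, use the time at which the page was fetched.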

Step 4: Store and Analyze Data

Finally, we can save the collected information in a structured format, such as a CSV file or a database, for later analysis. Here is how to store the data in a CSV file:

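```python
import csv


def save_to_csv(news_data, filename="google_news.csv"):
    if not news_data:  # nothing to write; also avoids IndexError on news_data[0]
        print("No articles to save.")
        return
    keys = news_data[0].keys()  # column headers taken from the first record
    with open(filename, "w", newline="", encoding="utf-8") as output_file:
        dict_writer = csv.DictWriter(output_file, fieldnames=keys)
        dict_writer.writeheader()
        dict_writer.writerows(news_data)


save_to_csv(news_data)
```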

The save_to_csv function writes the extracted news data to a CSV file. It uses the csv.DictWriter class to write the list of dictionaries, with the dictionary keys serving as column headers.
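To check what the CSV output looks like without touching the disk, you can run the same `csv.DictWriter` logic against an in-memory `io.StringIO` buffer and read it back with `csv.DictReader`. The sample record below is made up for illustration. It includes a comma to show that the writer quotes such fields automatically.

```python
import csv
import io

sample = [
    {"headline": "Example headline", "source": "Example Publisher",
     "date": "1 hour ago", "summary": "Snippet, with a comma",
     "link": "https://example.com/a"},
]

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=sample[0].keys())
writer.writeheader()
writer.writerows(sample)

buffer.seek(0)  # rewind so the reader starts at the header row
rows = list(csv.DictReader(buffer))
print(rows[0]["summary"])  # Snippet, with a comma
```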

Handling Pagination

Google News search results usually span more than one page. To extract information from multiple pages, you need to handle pagination. Here is one way to adjust the get_google_news function:

```python
import requests


def get_google_news(query, num_pages=5):
    news_data = []
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }
    for page in range(num_pages):
        # start is the zero-based offset of the first result: 0, 10, 20, ...
        params = {"q": query, "tbm": "nws", "start": page * 10}
        response = requests.get("https://www.google.com/search",
                                params=params, headers=headers, timeout=10)
        if response.status_code != 200:
            break  # stop paginating on an error or block
        news_data.extend(parse_news(response.text))  # parse_news from Step 3
    return news_data


query = "latest technology news"
news_data = get_google_news(query)
```

Here we add a num_pages parameter to get_google_news to specify how many pages to collect. The URL now includes the start parameter, which sets the offset of the first result on each page (0, 10, 20 and so on).
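The loop above stops only when a request fails. A slightly more defensive variant also stops when a page yields no articles. An empty page usually means you have run out of results, or that the selectors no longer match. The sketch below separates the pagination logic from the network call by taking a `fetch_page` function as a parameter. Both that parameter and the helper name `collect_pages` are hypothetical, and this design also lets you test the loop without contacting Google.

```python
def collect_pages(fetch_page, num_pages=5, page_size=10):
    """Call fetch_page(start) for successive offsets until a page is empty.

    fetch_page is any callable that takes a result offset (0, 10, 20, ...)
    and returns a list of parsed articles, or None if the request failed.
    """
    results = []
    for page in range(num_pages):
        articles = fetch_page(page * page_size)
        if not articles:  # request failed or no more results
            break
        results.extend(articles)
    return results


# Offline example: a fake fetcher that has only two pages of results
fake_pages = {0: ["a1", "a2"], 10: ["a3"]}
collected = collect_pages(lambda start: fake_pages.get(start))
print(collected)  # ['a1', 'a2', 'a3']
```

In real use, `fetch_page` would send the request with the given `start` offset and return `parse_news(response.text)`, or None on a non-200 status.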

Avoiding Detection

When gathering data from websites, avoiding detection is important if you want to avoid getting blocked. Here are a few suggestions for staying under the radar while collecting data from Google News:

Use Proxies: Routing your requests through proxies spreads them across multiple IP addresses. This makes it harder for the website to identify and restrict your scraping activity. Providers such as IPWAY offer proxy services for this purpose.
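requests accepts a `proxies` dictionary that maps a URL scheme to a proxy URL. A simple way to spread requests across several proxies is to cycle through a list. The proxy addresses below are placeholders, not real endpoints, so substitute the ones from your provider. The helper name `next_proxies` is hypothetical.

```python
from itertools import cycle

# Placeholder proxy URLs; replace with the endpoints and credentials from your provider
PROXY_URLS = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]
_proxy_pool = cycle(PROXY_URLS)


def next_proxies():
    """Return a requests-style proxies dict using the next proxy in round-robin order."""
    proxy = next(_proxy_pool)
    return {"http": proxy, "https": proxy}


# Usage with requests (not executed here):
# response = requests.get(url, headers=headers, proxies=next_proxies(), timeout=10)
```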

Randomize Request Intervals: Requests sent at regular, rapid intervals are a telltale sign of automated scraping. Adding random delays between requests makes the traffic pattern look more like natural human browsing. Here is the pagination function with a random delay added after each request:

```python
import random
import time

import requests


def get_google_news(query, num_pages=5):
    news_data = []
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }
    for page in range(num_pages):
        params = {"q": query, "tbm": "nws", "start": page * 10}
        response = requests.get("https://www.google.com/search",
                                params=params, headers=headers, timeout=10)
        if response.status_code != 200:
            break
        news_data.extend(parse_news(response.text))
        time.sleep(random.uniform(1, 5))  # random delay of 1 to 5 seconds
    return news_data
```
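Random delays spread requests out. However, if Google starts answering with HTTP 429 (Too Many Requests), it is usually better to back off progressively than to give up immediately or keep hitting the server. The sketch below is a hypothetical helper, `fetch_with_backoff`, that retries with exponentially growing, jittered delays. It takes the fetch function and the sleep function as parameters so that it can be exercised offline.

```python
import random
import time


def fetch_with_backoff(fetch, max_retries=3, base_delay=2.0, sleep=time.sleep):
    """Call fetch() and retry on HTTP 429 with exponential backoff plus jitter.

    fetch must return an object with a status_code attribute (such as a
    requests.Response). Returns the last response, successful or not.
    """
    response = fetch()
    for attempt in range(max_retries):
        if response.status_code != 429:
            break
        # Wait base_delay * 2**attempt seconds, plus up to 1 s of random jitter
        sleep(base_delay * 2 ** attempt + random.uniform(0, 1))
        response = fetch()
    return response


# Offline demo: two 429 responses followed by a 200
class _FakeResponse:
    def __init__(self, status_code):
        self.status_code = status_code


_responses = iter([_FakeResponse(429), _FakeResponse(429), _FakeResponse(200)])
recorded_delays = []
result = fetch_with_backoff(lambda: next(_responses), sleep=recorded_delays.append)
print(result.status_code, len(recorded_delays))  # 200 2
```

With requests, the call would look like `fetch_with_backoff(lambda: requests.get(url, params=params, headers=headers, timeout=10))`.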

Rotate User Agents: Switching user agents helps avoid detection by making requests appear to come from different browsers and devices. Libraries such as fake_useragent (installed with `pip install fake-useragent`) make rotating user agents easy:

```python
import random
import time

import requests
from fake_useragent import UserAgent


def get_google_news(query, num_pages=5):
    news_data = []
    ua = UserAgent()
    for page in range(num_pages):
        params = {"q": query, "tbm": "nws", "start": page * 10}
        headers = {"User-Agent": ua.random}  # a different random user agent per request
        response = requests.get("https://www.google.com/search",
                                params=params, headers=headers, timeout=10)
        if response.status_code != 200:
            break
        news_data.extend(parse_news(response.text))
        time.sleep(random.uniform(1, 5))  # random delay of 1 to 5 seconds
    return news_data
```
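If you would rather not depend on fake_useragent, you can get a similar effect by rotating through a fixed pool of user-agent strings with `random.choice`. The strings below follow real browser user-agent formats but will age, so refresh them periodically. The helper name `random_headers` is hypothetical.

```python
import random

# Example desktop browser user-agent strings (update these over time)
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/14.1.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:89.0) Gecko/20100101 Firefox/89.0",
]


def random_headers():
    """Build request headers with a user agent picked at random from the pool."""
    return {"User-Agent": random.choice(USER_AGENTS)}


headers = random_headers()
# Pass to requests: requests.get(url, params=params, headers=headers, timeout=10)
```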

Scrape Google News through Google SERPs

Another way to scrape Google News is to target Google's search engine results pages (SERPs). When we search Google with the News filter, we can collect and analyze the results to extract the data we need. The procedure involves sending HTTP requests, parsing the HTML content, handling pagination and saving the collected data. Let's explore each step in more detail.

Understanding Google SERPs

When you search on Google, the results page lists websites that match your search terms. When you search for news, the results page shows not only headlines but also details such as the source, the publication time, brief summaries and links to the full articles. To collect this data, we have to examine how Google structures the information in its HTML.

Step-by-Step Process to Scrape Google News through Google SERPs

Step 1: Send HTTP Requests

To fetch news from Google, start by sending an HTTP request to Google's search page with the right search terms. Here is a basic example:

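```python
import requests


def get_google_news(query):
    url = "https://www.google.com/search"
    # tbm=nws restricts results to the News tab; passing the query via params
    # lets requests URL-encode spaces and special characters such as "&"
    params = {"q": query, "tbm": "nws"}
    headers = {
        # A desktop browser User-Agent makes the request look less like a script
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }
    response = requests.get(url, params=params, headers=headers, timeout=10)
    if response.status_code == 200:
        return response.text
    return None  # blocked, rate-limited or another error


query = "latest technology news"
html_content = get_google_news(query)
```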

In this code we create a function called get_google_news that accepts a search term as an argument and requests a Google News search for it. The headers imitate a browser request, which helps reduce the risk of detection and blocking by Google.

The function then sends an HTTP GET request. If the request succeeds, it returns the HTML content of the search results; otherwise, it returns None.

Step 2: Parse HTML Content

After obtaining the HTML content of the search results, we can use BeautifulSoup, a widely used Python library for parsing HTML and XML documents, to retrieve the necessary data attributes. Here is how:

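```python
from bs4 import BeautifulSoup


def text_of(element):
    # Return the element's text, or an empty string if it was not found
    return element.get_text(strip=True) if element is not None else ""


def parse_news(html_content):
    if not html_content:  # the request failed or returned no HTML
        return []
    soup = BeautifulSoup(html_content, "html.parser")
    # These class names reflect Google's markup at the time of writing and
    # change often; inspect the live page and update them if nothing matches
    articles = soup.find_all("div", class_="dbsr")
    news_data = []
    for article in articles:
        link_tag = article.find("a", href=True)  # first link in the result
        news_data.append({
            "headline": text_of(article.find("div", class_="JheGif nDgy9d")),
            "source": text_of(article.find("div", class_="XTjFC WF4CUc")),
            "date": text_of(article.find("span", class_="WG9SHc")),
            "summary": text_of(article.find("div", class_="Y3v8qd")),
            "link": link_tag["href"] if link_tag is not None else "",
        })
    return news_data


news_data = parse_news(html_content)
```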

In the parse_news function we use BeautifulSoup to parse the HTML content. The soup.find_all method finds all div elements with the class dbsr, which hold the individual news results.

For each article, we retrieve the headline, source, publication date, summary and link by locating the relevant HTML tags and classes. The results are stored in a list of dictionaries, one per news article.
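Depending on which version of the results page Google serves, result links are not always direct URLs. In the basic HTML version, they can appear as relative redirect links of the form `/url?q=<target>&sa=...`. The hypothetical helper below (`clean_google_link`) unwraps such links with `urllib.parse` and leaves ordinary URLs unchanged. Treat the `/url?q=` pattern as an assumption to verify against the HTML you actually receive.

```python
from urllib.parse import parse_qs, urlparse


def clean_google_link(href):
    """Return the target URL from a Google '/url?q=...' redirect, else href unchanged."""
    parsed = urlparse(href)
    if parsed.path == "/url":
        target = parse_qs(parsed.query).get("q")
        if target:
            return target[0]  # parse_qs already percent-decodes the value
    return href


print(clean_google_link("/url?q=https://example.com/story%3Fid%3D7&sa=U"))
# https://example.com/story?id=7
```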

Step 3: Handle Pagination

Google News search results usually span several pages. To extract information from all of them, we need to handle pagination by iterating over the pages and adjusting the query URL for each one. Here is how to update the get_google_news function:

```python
import requests


def get_google_news(query, num_pages=5):
    news_data = []
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36"
    }
    for page in range(num_pages):
        # start is the zero-based offset of the first result: 0, 10, 20, ...
        params = {"q": query, "tbm": "nws", "start": page * 10}
        response = requests.get("https://www.google.com/search",
                                params=params, headers=headers, timeout=10)
        if response.status_code != 200:
            break  # stop paginating on an error or block
        news_data.extend(parse_news(response.text))  # parse_news as defined above
    return news_data


query = "latest technology news"
news_data = get_google_news(query)
```

The get_google_news function now takes a num_pages parameter that sets how many pages to collect. The start parameter in the URL indicates where the results on each page begin. By iterating over the requested number of pages, we can collect news from a wider range of sources.
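When you collect several pages, the same article may occasionally appear more than once, for example if results shift between requests. A small post-processing step that keeps only the first occurrence of each link guards against duplicates before you save the data. The function name `dedupe_by_link` is hypothetical.

```python
def dedupe_by_link(news_data):
    """Keep the first article for each distinct link, preserving order."""
    seen = set()
    unique = []
    for article in news_data:
        link = article.get("link")
        if not link:
            unique.append(article)  # no link to compare on; keep it
            continue
        if link in seen:
            continue  # skip repeats of an article already collected
        seen.add(link)
        unique.append(article)
    return unique


sample = [
    {"headline": "A", "link": "https://example.com/1"},
    {"headline": "A (again)", "link": "https://example.com/1"},
    {"headline": "B", "link": "https://example.com/2"},
]
print([a["headline"] for a in dedupe_by_link(sample)])  # ['A', 'B']
```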

Step 4: Store and Analyze Data

Finally, we need to store the extracted data in a structured format, such as a CSV file or a database, for further analysis. Here’s how to save the data to a CSV file:

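```python
import csv


def save_to_csv(news_data, filename="google_news.csv"):
    if not news_data:  # nothing to write; also avoids IndexError on news_data[0]
        print("No articles to save.")
        return
    keys = news_data[0].keys()  # column headers taken from the first record
    with open(filename, "w", newline="", encoding="utf-8") as output_file:
        dict_writer = csv.DictWriter(output_file, fieldnames=keys)
        dict_writer.writeheader()
        dict_writer.writerows(news_data)


save_to_csv(news_data)
```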

The save_to_csv function takes a list of dictionaries (news_data) and writes it to a CSV file. It uses the csv.DictWriter class, with the dictionary keys as column headings. The resulting structured data can feed a range of analyses, including sentiment analysis, trend evaluation and content planning.
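As a first step toward trend evaluation, you can count how many articles each publisher contributed. This sketch applies `collections.Counter` to a list of dictionaries shaped like the output of parse_news. The sample data is made up. The same approach works on rows read back from the CSV file with `csv.DictReader`.

```python
from collections import Counter

sample_articles = [
    {"headline": "Chip shortage eases", "source": "Outlet A"},
    {"headline": "New phone launched", "source": "Outlet B"},
    {"headline": "AI model released", "source": "Outlet A"},
]

# Count articles per publisher and list the most frequent first
source_counts = Counter(article["source"] for article in sample_articles)
for source, count in source_counts.most_common():
    print(f"{source}: {count}")
# Outlet A: 2
# Outlet B: 1
```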

Conclusion

Scraping Google News with Python is an effective way to gather up-to-date information for purposes such as market analysis and content development. This article discussed the benefits of scraping Google News as well as the legal and ethical considerations involved, and walked through how to access Google News results programmatically.

By following these instructions, you can build your own tool to scrape Google News responsibly and effectively. Remember to use this knowledge carefully and to adhere to the terms of service of the websites you extract information from.

Take your data scraping to the next level with IPWAY’s datacenter proxies!