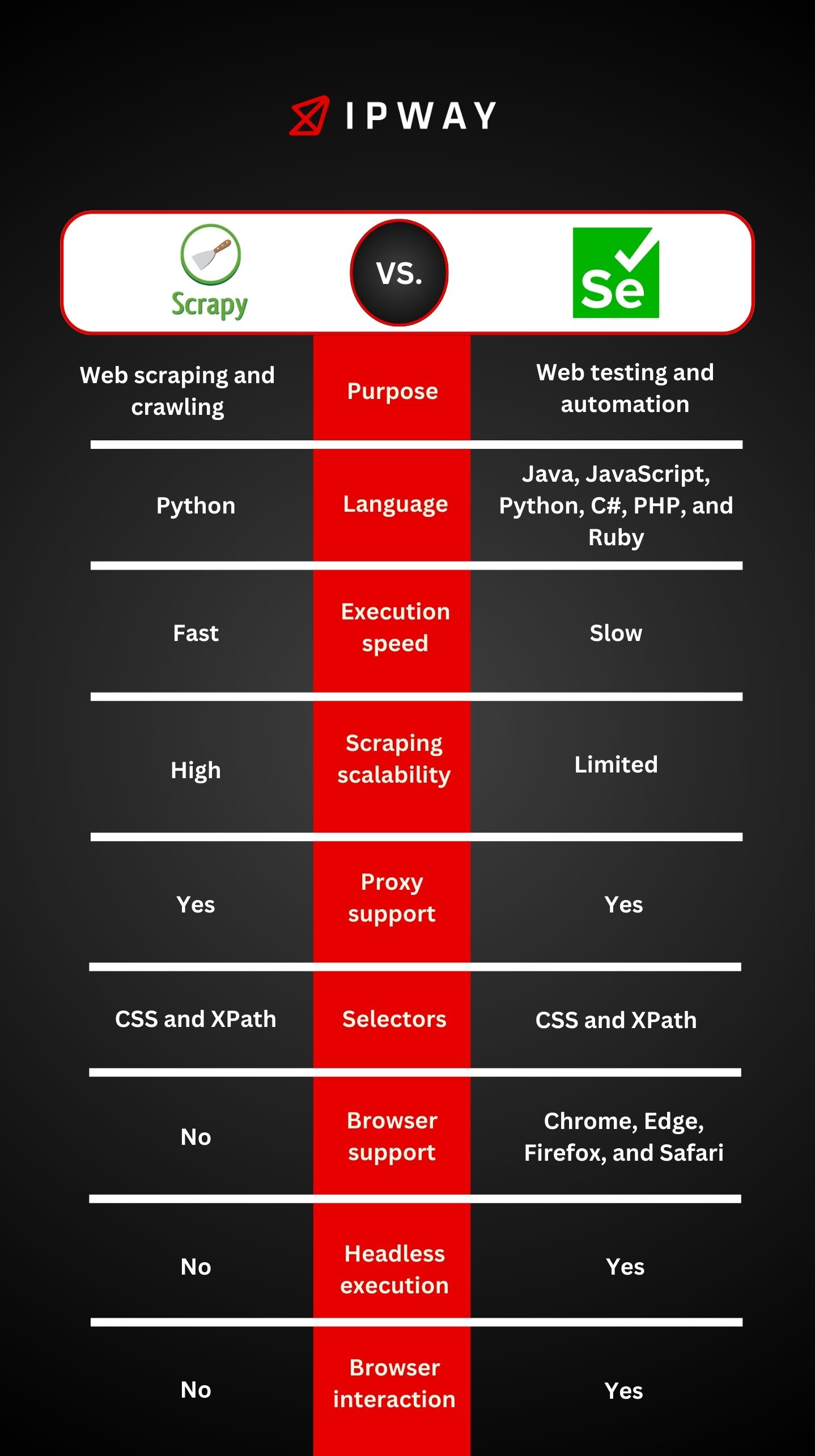

Scrapy vs. Selenium: When it comes to web scraping Scrapy and Selenium are both tools but they have distinct methods and features. Scrapy, a Python framework tailored for web scraping excels in speed and scalability. It works by sending HTTP requests and parsing HTML responses making it perfect for extracting organized data from websites with ease. In contrast Selenium serves as a web automation tool that enables users to mimic interactions, with web browsers.

What’s the difference between Selenium and Scrapy?

Scrapy vs Selenium serve purposes in web scraping. Scrapy functions as a web crawling framework focused on data extraction from websites while Selenium is tailored for automating web browsers to handle tasks like simulating user interactions such, as clicking or scrolling on web pages.

What is Scrapy?

Scrapy, an open source framework is designed specifically for browsing and extracting information from websites. Tailored for Python, its efficient way of functioning, easy to use design and impressive speed make it ideal for projects of all sizes large scale ones. While primarily used for web scraping Scrapy shows its adaptability by being utilized in ways, like testing the load on web servers.

Is Scrapy still used?

Scrapy has attracted a lot of interest. Its well deserved. Being a powerful web scraping tool it allows for multiple requests simultaneously while offering a user friendly experience. This enables developers to take on scraping tasks of varying scopes without going over their budget.

What is Selenium?

Selenium, a framework that’s free to use provides a comprehensive set of tools for testing and automating web processes on different browsers and devices. With support for programming languages Selenium empowers users to control browser activities and handle web components.

This functionality enables a variety of automated actions within the browser such as clicking buttons selecting items, from dropdown menus entering text into fields navigating websites and performing tasks based on browser interactions.

Scrapy vs Selenium: pros and cons

When choosing between Scrapy and Selenium, for web scraping it’s crucial to evaluate their strengths and weaknesses. Both tools have capabilities and perform best in specific scenarios so being aware of these differences can greatly influence the outcome of your project.

Pros and Cons of Scrapy

Pros

High Efficiency: Scrapy runs on a system that enables it to handle requests and responses effectively without having to wait for tasks to finish. This results in a speed boost particularly when dealing with extensive scraping projects.

Optimized for Web Scraping: Designed with a focus, on web scraping Scrapy provides a variety of installed features and tools to manage cookies, sessions and HTTP headers. It also facilitates the extraction and manipulation of data.

Extensible Architecture: The structure of Scrapy is very adaptable. Can be customized extensively. Programmers have the flexibility to enhance its functions either by using plugins or by integrating libraries directly to broaden its features.

Low Resource Usage: Scrapy unlike tools that operate within a browser does not require the loading of JavaScript and other frontend resources. This helps save processing capabilities.

Cons

Limited JavaScript Support: When using Scrapy it mainly retrieves information, from the HTML data obtained through HTTP requests. It may face challenges when dealing with websites that heavily depend on JavaScript for displaying their content.

Steep Learning Curve: Scrapy is quite robust. It can be challenging to learn because of its complex structure and the requirement to understand XPath or CSS selectors for extracting data.

Pros and Cons of Selenium

Pros

Complete Browser Automation: Selenium is capable of automating browser tasks like clicking submitting forms and scrolling. These actions are crucial, for engaging with web pages that display content based on user interactions.

Ideal for Dynamic Websites: It has the ability to display web pages like a person would performing tasks such as running JavaScript and AJAX requests. This makes it ideal, for extracting data from websites that load content dynamically.

Cross-browser Compatibility: Selenium is compatible with the popular web browsers and their corresponding drivers allowing for testing and data extraction across various browser platforms without the need, for extra configuration.

Visual Debugging: Selenium’s feature of displaying the scraping process in an actual browser window makes it easier to troubleshoot and monitor the actions of web applications.

Cons

Performance: Using browser instances in Selenium can cause it to run slower and require more resources compared to Scrapy. This may result in execution durations and increased resource usage, which can be particularly challenging when scraping large amounts of data.

Complex Setup for Scalability: Working with Selenium, for automating web browsers is quite effective. When it comes to scaling Selenium scripts across various instances and handling multiple browser sessions simultaneously it can become challenging and demanding on resources.

Higher Maintenance: Browser updates might necessitate adjustments, to Selenium scripts and browser drivers potentially adding to the maintenance workload.

Web scraping features of Scrapy vs Selenium

Although they have their limitations both tools have qualities that make Scrapy and Selenium powerful, in different situations:

Scraping with Scrapy

Spiders

- Spiders are, like guidelines that determine the way a website or a group of websites should be browsed and analyzed. This function allows for flexible web scraping.

Requests and responses

- Scrapy offers features like networking, prioritizing requests, scheduling tasks, automatic retries for requests as well as built in tools, for handling redirects, cookies, sessions and common errors encountered during web scraping.

AutoThrottle

- This tool allows for the adaptation of crawling speed by taking into account the workload of both Scrapy and the server of the website being accessed. This helps ensure that your web scraping activities do not put strain on the target site unlike when using the standard crawling speeds.

Items

- The data that has been extracted is shown as items, displayed as Python objects with key value pairs. You have the flexibility to personalize and modify these items to match your data needs. This feature makes it easier to access and work, with data.

Item pipeline

- Item pipelines allow for data processing prior to its export and storage. They assist in performing a range of functions, such as validation, cleansing, alteration and the eventual storage of data, in databases.

Selectors

- Scrapy provides support, for XPath and CSS selectors giving you the flexibility to navigate and select HTML elements. This capability enables you to make use of both techniques enhancing the performance of web scraping.

Feed export

- This feature lets you export data in ways using different serialization formats and storage systems. The standard export options include JSON, JSON lines, CSV and XML. Furthermore you have the option to add formats using the feed export function.

Additional Scrapy services

- To improve the functionality of your scraper consider utilizing integrated features, like tracking events gathering statistics sending emails and accessing the telnet console.

Scraping with Selenium

Dynamic rendering

- Selenium uses a browser driver to access the content of web pages allowing it to display JavaScript and AJAX based data smoothly. It runs scripts, waits for elements to load on the page and engages with content making it a preferred tool for extracting information, from dynamic web pages.

Browser automation

- Selenium allows you to replicate actions in your web interactions bypassing anti bot detection mechanisms effectively. Additionally Selenium can automate browser activities such, as clicking buttons typing text handling pop ups and notifications and even solving CAPTCHAs.

Remote WebDriver

- You can use Selenium to run your script, on devices allowing for project scalability and executing tasks simultaneously.

Selectors

- Like Scrapy Selenium uses XPath and CSS selectors to navigate and select HTML elements.

Browser profiles and preferences

- You can. Set up different browser profiles and settings like cookies and user agents. This feature allows you to improve your web scraping efforts, for results.

Can Scrapy and Selenium be used together?

Yes they certainly can. There are situations where using both tools could be beneficial. Scrapy has limitations when it comes to fetching loaded content from websites, especially those powered by JavaScript or AJAX. In cases Selenium can come in handy by first loading the website in a browser and then capturing the page source along, with the dynamically generated data.

Using Scrapy along, with Selenium can be handy when you need to interact with a website to get data. Selenium helps automate user actions. Fetch the webpage source, which can then be passed on to Scrapy for further handling.

Conclusion

When deciding between “Scrapy vs. Selenium ” the choice can be complex. Depends greatly on the needs of your web scraping project. Scrapy is great for efficient data extraction, from various pages whereas Selenium shines in its capability to engage with dynamic content. To get the most out of your projects think about leveraging their strengths in situations where you require both efficient data extraction and interaction.

Picking the tool can have a significant impact, on how successful your scraping projects turn out. By grasping the strengths and weaknesses of Scrapy and Selenium developers can effectively utilize web scraping to generate business insights and make informed decisions.

Discover how IPWAY’s innovative solutions can revolutionize your web scraping experience for a better and more efficient approach.